Develop and deliver

Overview

This page mostly showcases work where I'm exploring ideas and visualising complex system processes. It also includes samples where I used generative AI as part of my process, such as storyboarding.

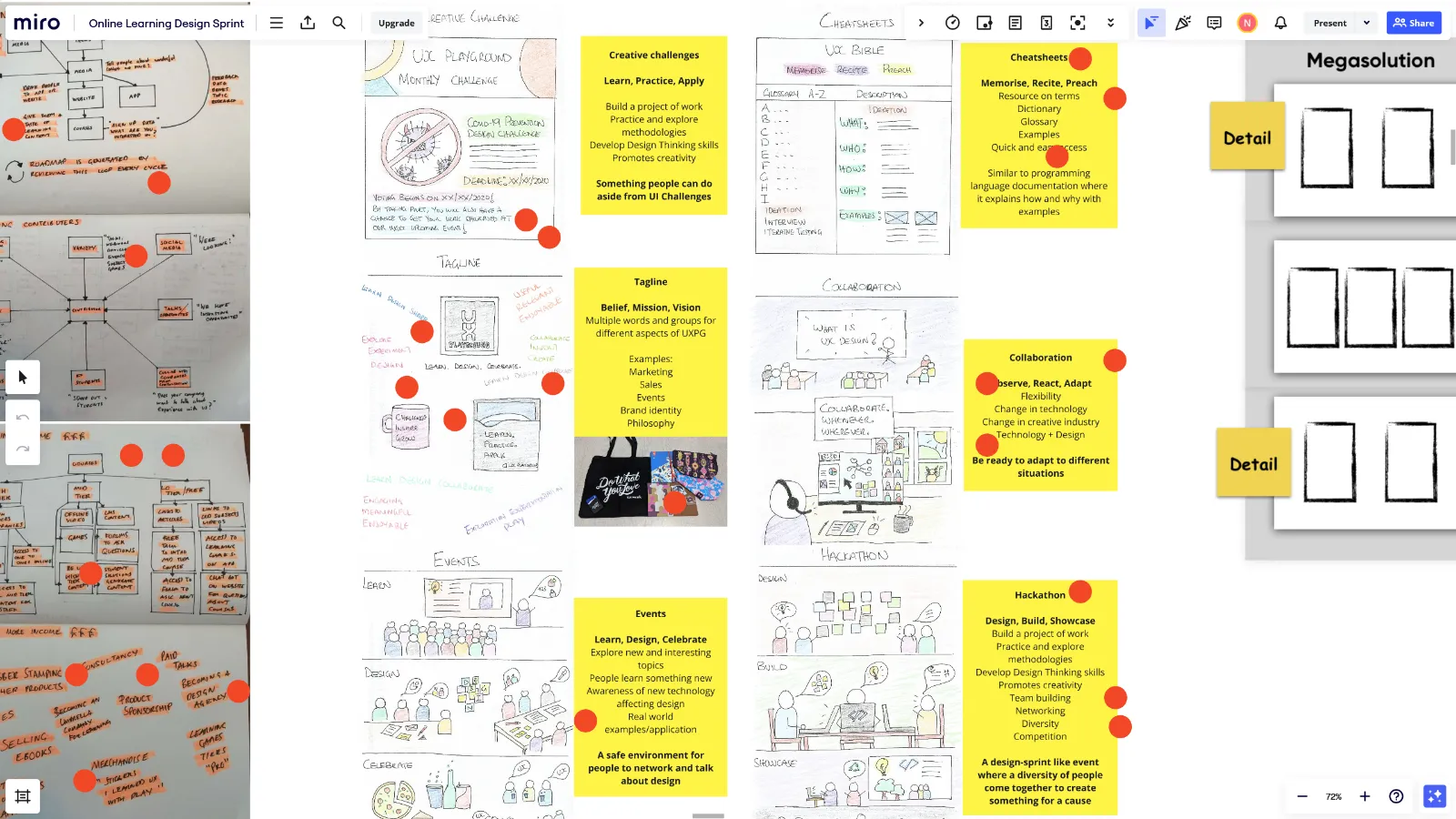

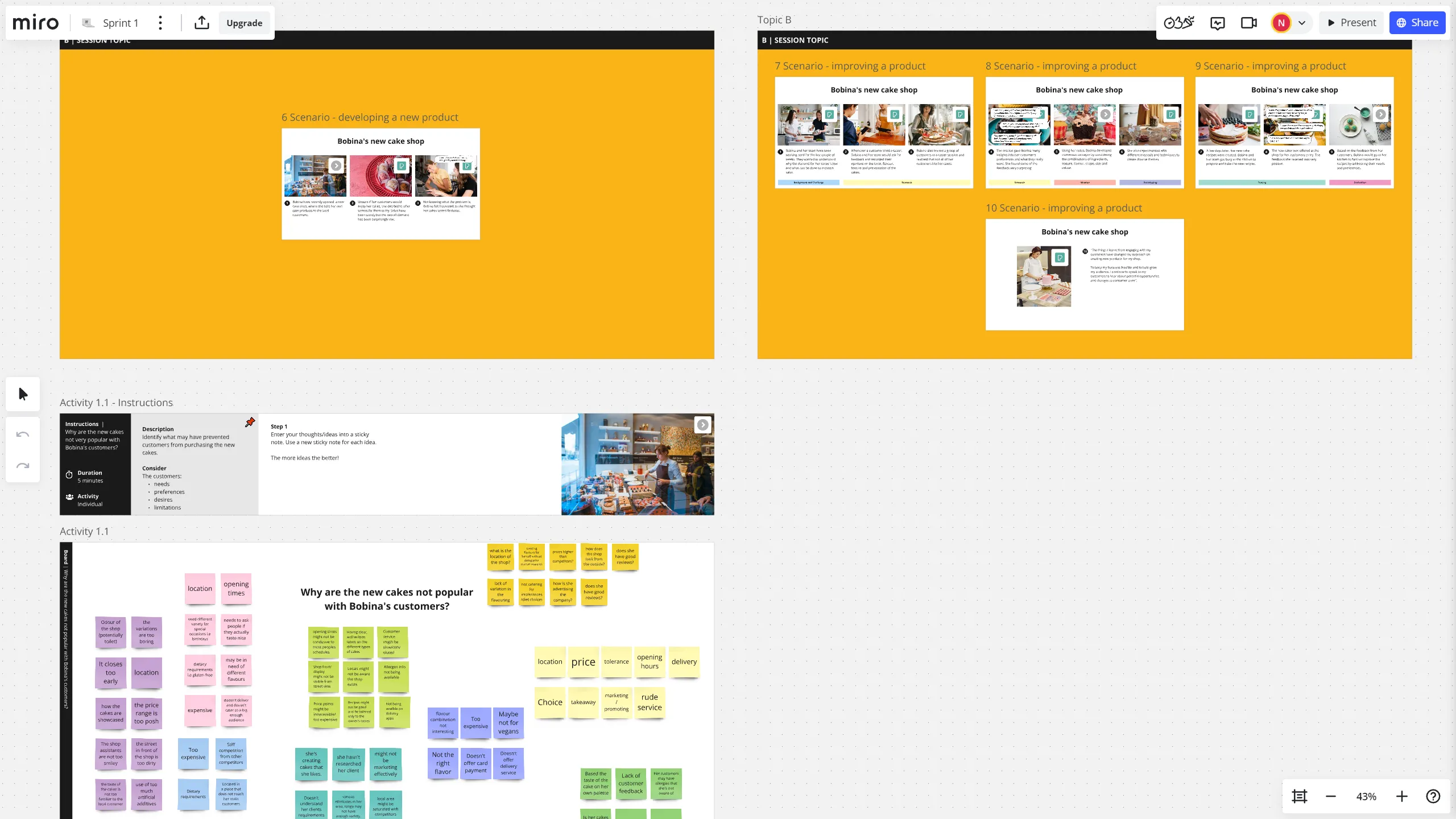

Sketching: 2-day design sprint on how to transition into online course delivery

At UX Playground, we ran a design sprint to explore approaches for transitioning an in-person UX course to an online format. During the first session, I enjoyed sketching concepts focused on community interaction and on ways to promote the new course, such as design hackathons and community challenges. In a later group voting session, a common theme emerged around offering a variety of activities that foster interaction within the community, such as collaborative projects and learning sessions.

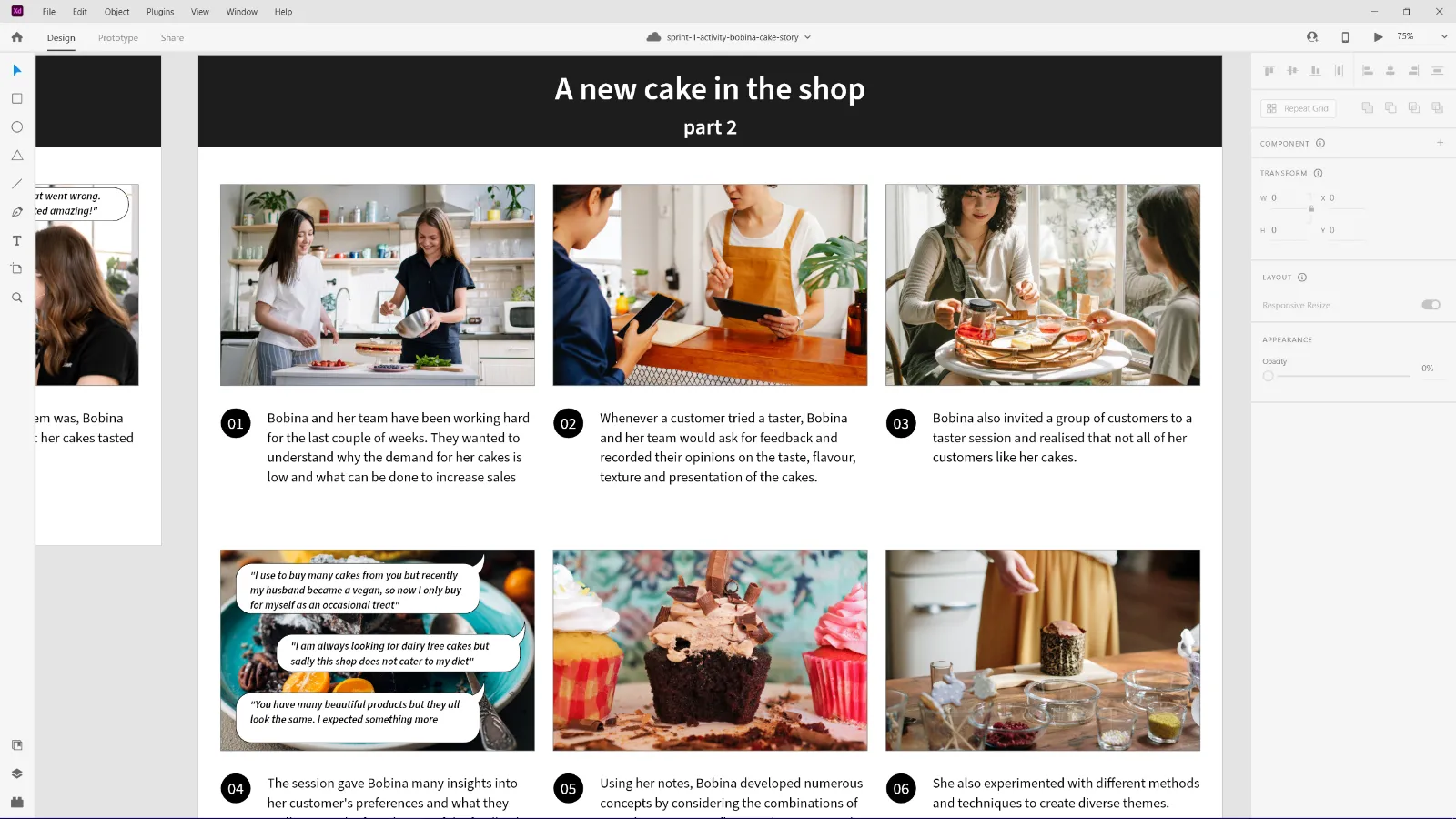

Storyboarding: cake baking as an example to explain the UX process

For the development of UX Playground's UX course, the goal was to create content and activities that teach UX design to non-design professionals. For one of the introduction sessions, I developed a storyboard using the example of a baker running a cake shop. I chose this example because it had helped me learn about the UX process when I was a front-end developer.

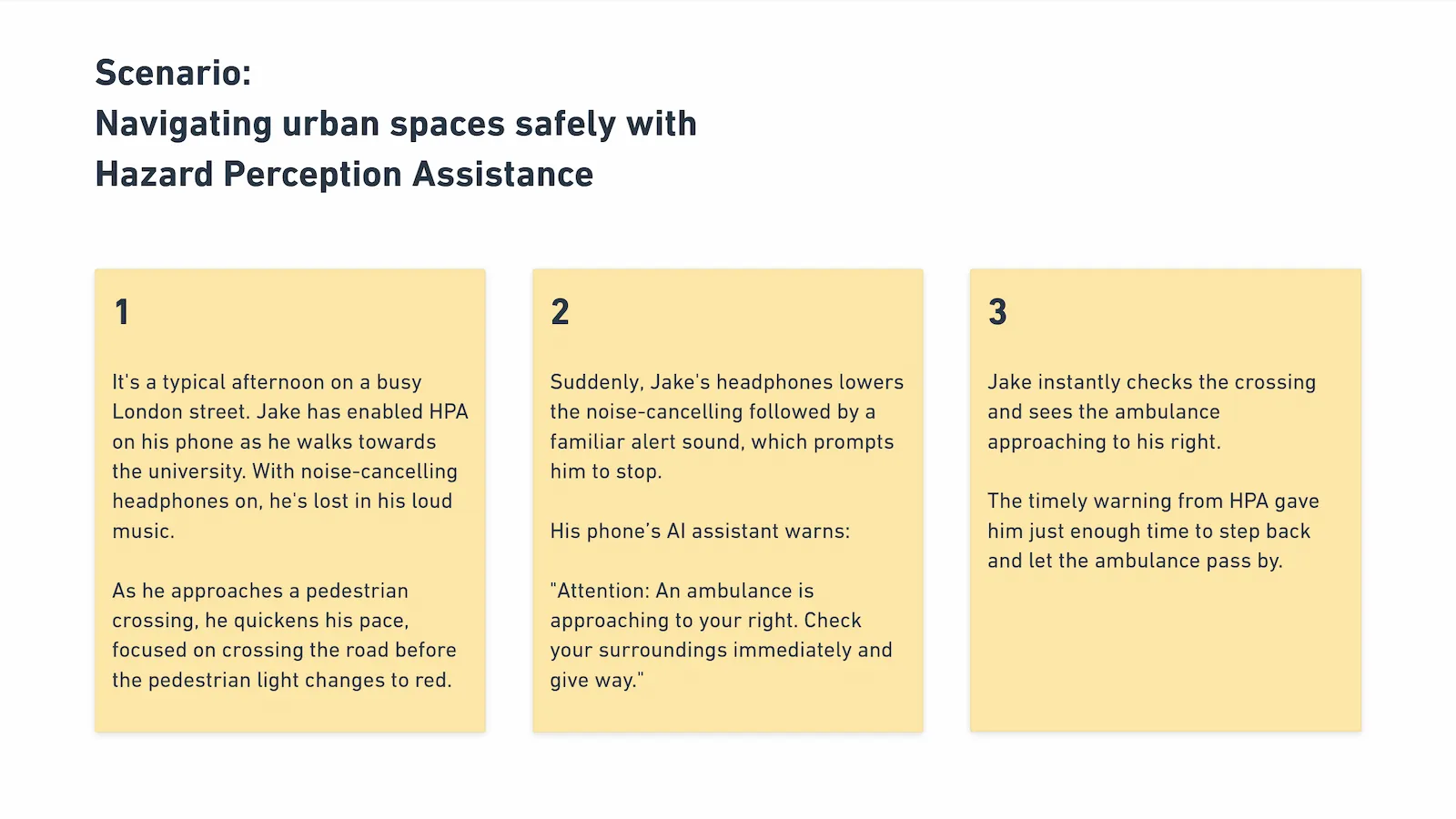

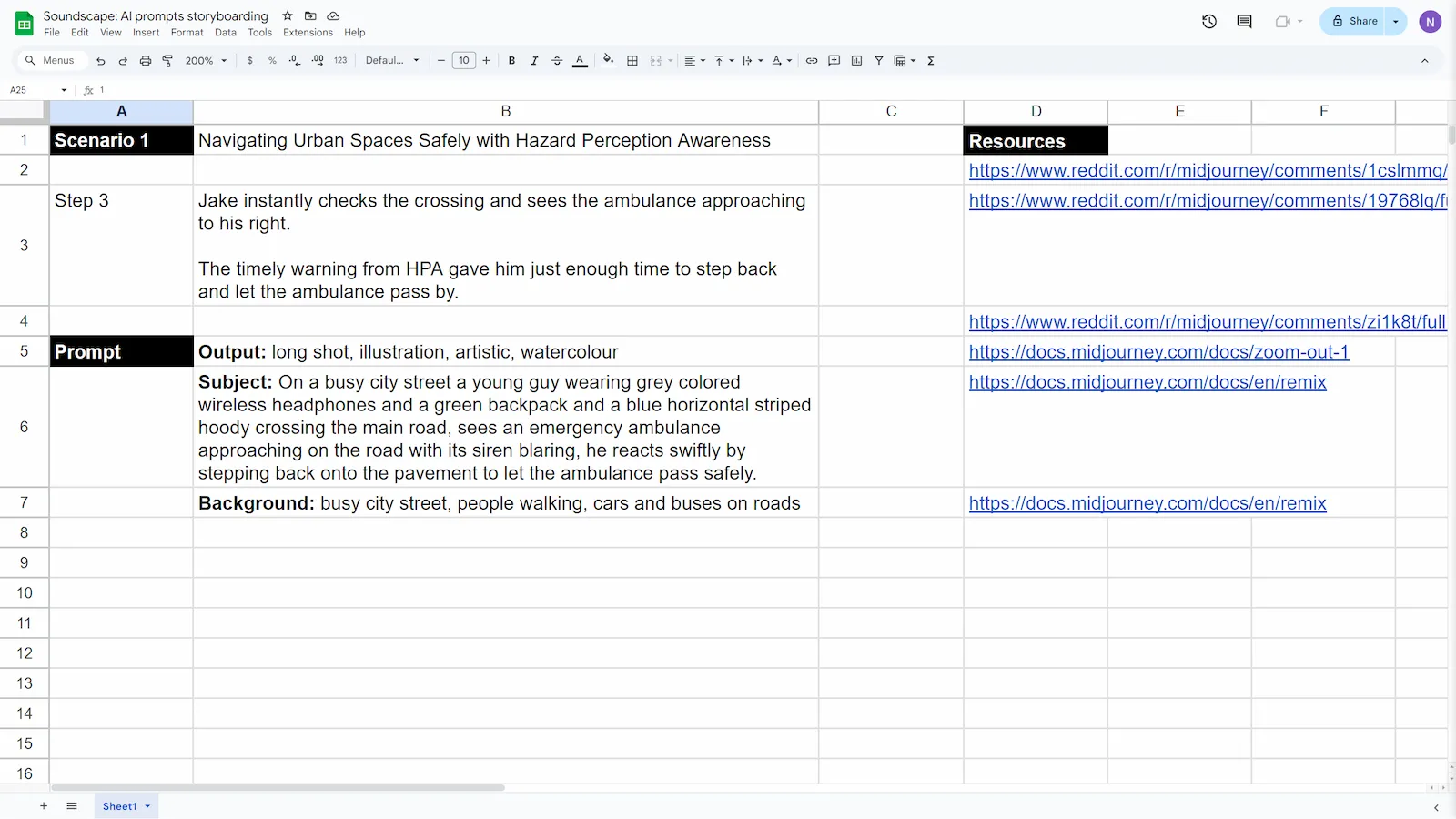

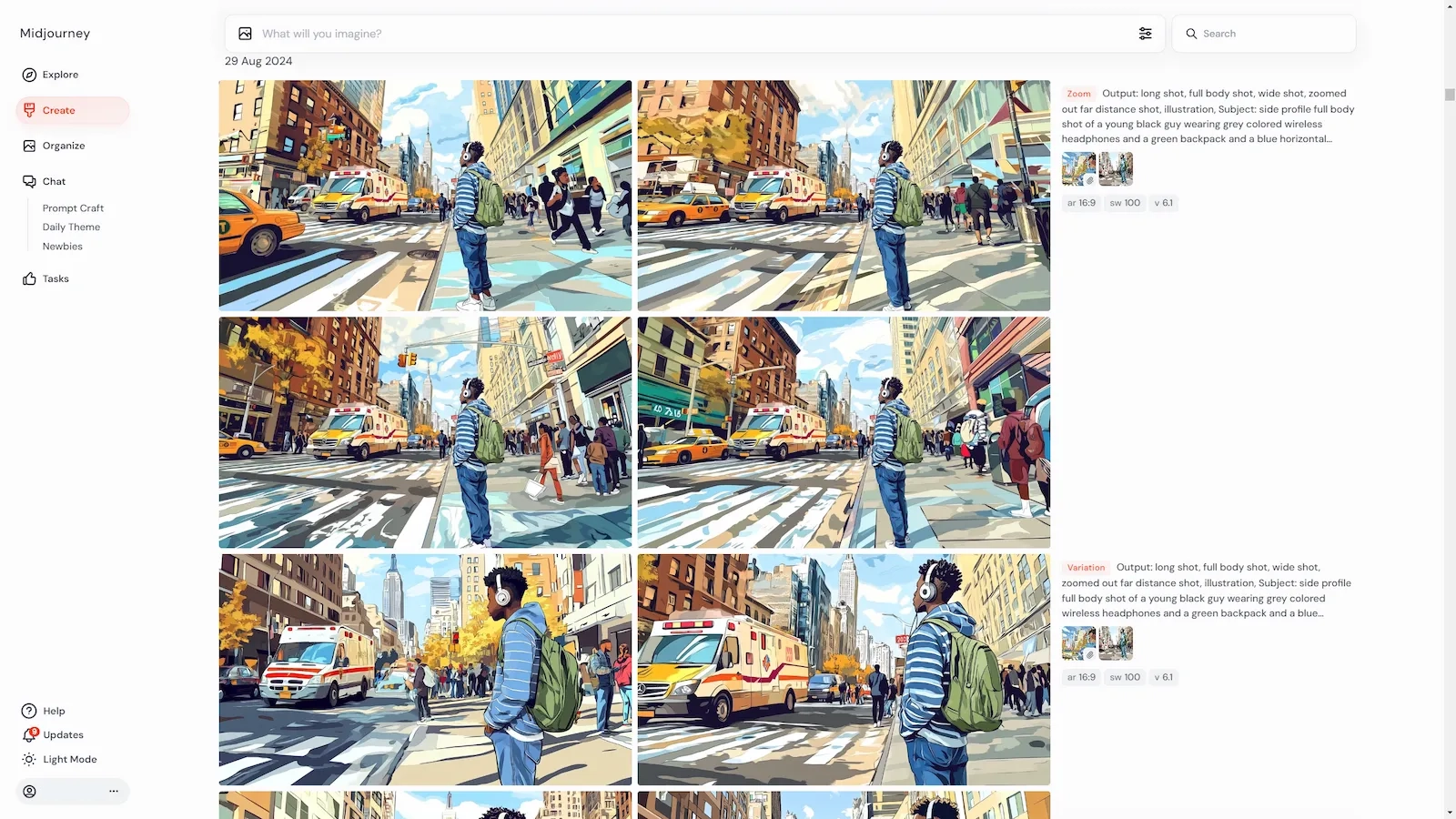

Storyboarding: using generative AI (Midjourney) to create images for storytelling

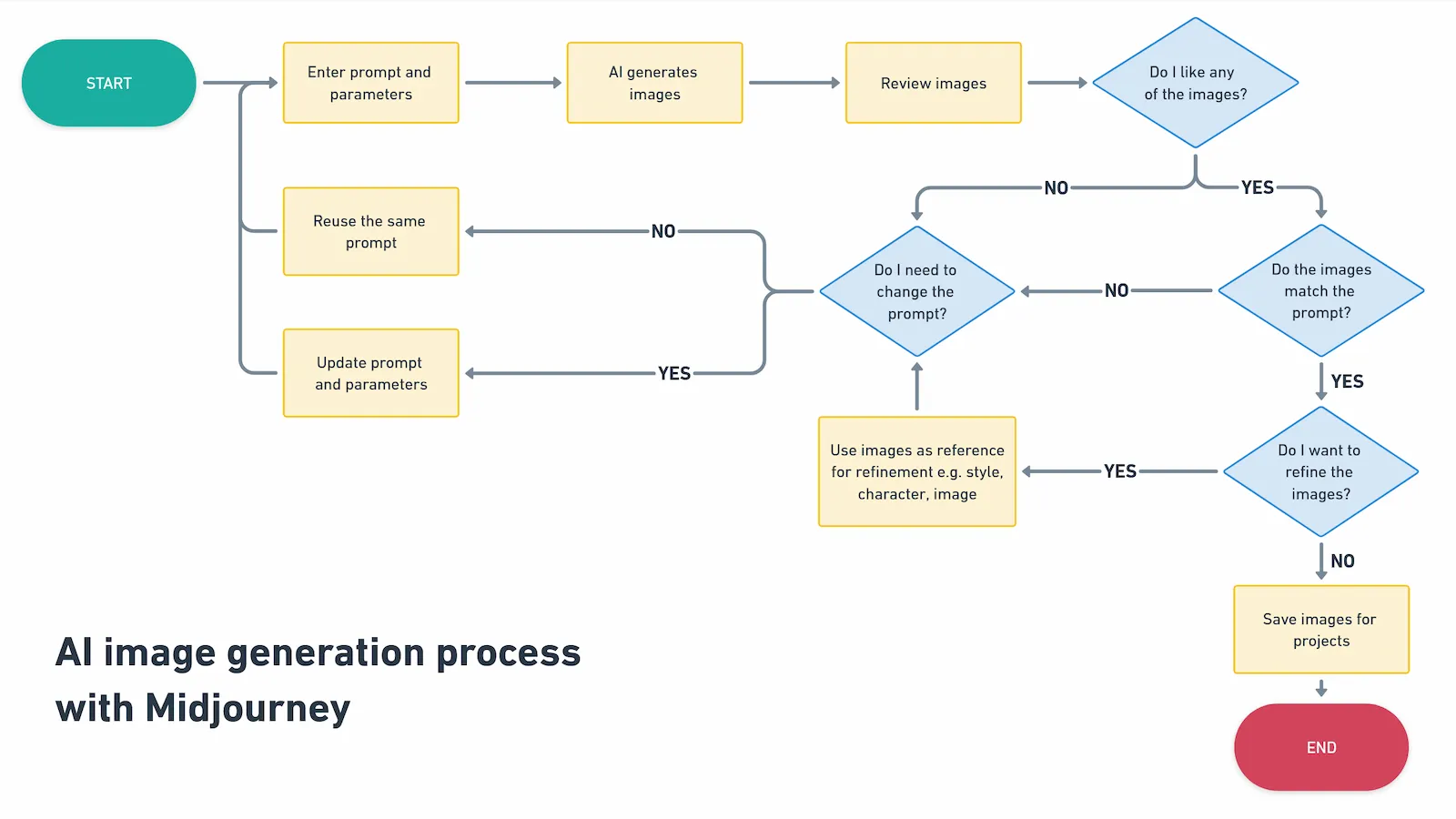

For an article series on multisensory design, I used generative AI (Midjourney) to create storyboard images. I learned that getting the style, characters, and scenes to match our vision required a very detailed and structured prompt. Through experimentation, I found that prompts needed to be specific so the AI could better interpret the output I wanted. Personally, it felt like I was the product owner and the AI was the designer, trying its best to understand my instructions and create results that matched them.